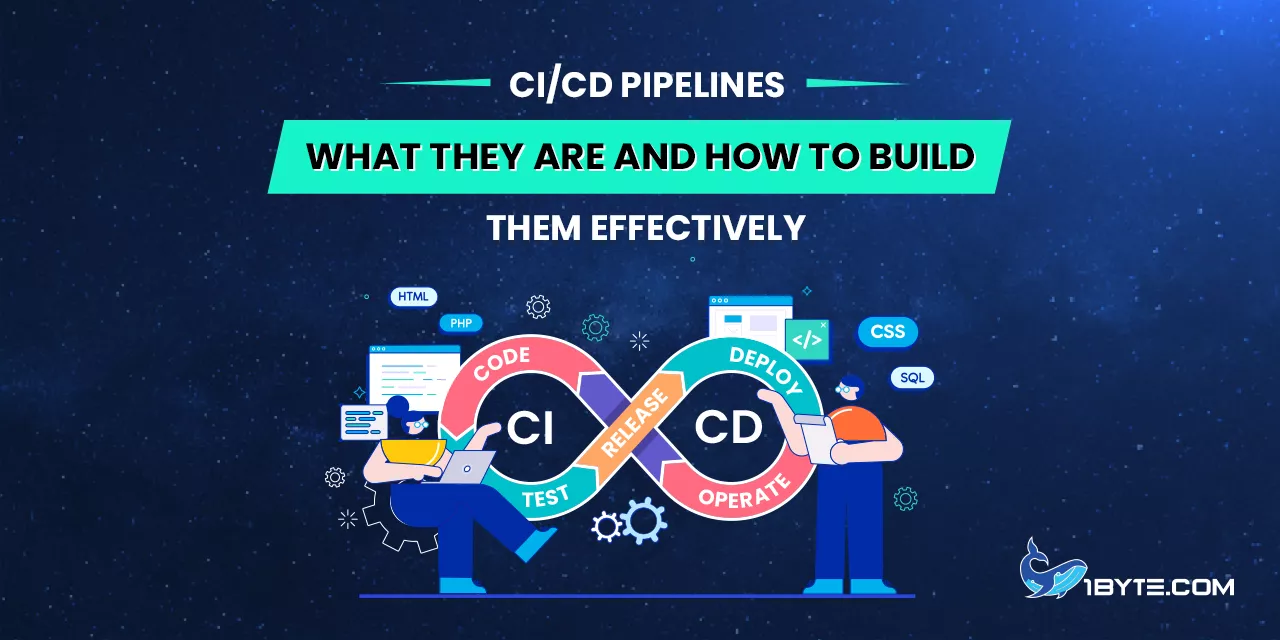

CI/CD pipelines automate the software delivery workflow. They connect code changes to production by automating builds, tests, and deployments. By combining Continuous Integration (CI) with Continuous Delivery/Deployment (CD), pipelines help teams catch bugs early and release changes faster. A typical CI/CD pipeline starts with a commit to the code repository and ends with a deployment to production, passing through build, test, and packaging steps along the way.

CI/CD pipelines bring many concrete benefits. They reduce manual work and human error in the deployment process, which makes releases more dependable. For example, integrating changes through a pipeline greatly reduces the chance of flaws in the deployment procedure and ensures that bugs surface during testing. Teams that use CI/CD respond faster to user feedback, release software more quickly, and ship higher-quality code. Indeed, studies indicate that adopting CI/CD tools is associated with better software delivery performance across all DevOps performance indicators. In other words, pipelines do not just save time; they also improve overall software quality and team productivity.

CI/CD adoption is already high and still rising. In a recent survey by the Cloud Native Computing Foundation, 60% of organizations reported using CI/CD pipelines for most or all of their applications, up from 46% the year before. A separate developer survey found that 50 percent of developers regularly use CI/CD tools. These figures show that CI/CD practices are now standard in modern DevOps teams. Read this article from 1Byte to learn more.

What Are CI/CD Pipelines?

A CI/CD pipeline is an automated workflow that carries code changes from development to production. It typically includes steps for building the code, running automated tests, and deploying to an environment. According to GitLab, “CI/CD pipelines” are designed to “streamline the creation, testing and deployment of applications”. In practical terms, whenever a developer merges new code into the main repository (Continuous Integration), the pipeline automatically builds the application and runs tests. If the code passes all checks, the pipeline can automatically push the code forward for Continuous Delivery or Deployment.

The process is fully automated in the sense that new features and fixes pass through the pipeline with little or no human involvement. Teams define rules and conditions so that every commit triggers a pipeline run. Modern pipelines run in stages that can include code compilation, unit tests, security scans, artifact packaging, and, finally, deployment to staging or production. Because every step is automated and repeatable, errors are caught earlier. According to one source, pipelines improve software quality and speed up delivery through frequent, consistent updates. This lets development teams focus on writing code rather than on manual releases.
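The trigger rules described above can be modeled as a small predicate. This is a minimal, tool-agnostic sketch: the `Event` type, the event kind names, and the `TRIGGER_RULES` list are hypothetical, and real CI platforms express the same idea in their own configuration syntax.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase


@dataclass(frozen=True)
class Event:
    """Hypothetical repository event, as a CI system might receive it."""
    kind: str    # e.g. "push", "pull_request_merged", "comment"
    branch: str  # branch that was pushed to or merged into


# Hypothetical trigger rules as (event kind, branch glob) pairs:
# every push to any branch runs CI, and merges into main run it too.
TRIGGER_RULES = [
    ("push", "*"),
    ("pull_request_merged", "main"),
]


def should_trigger(event: Event) -> bool:
    """Return True if any rule matches both the event kind and the branch."""
    return any(
        event.kind == kind and fnmatchcase(event.branch, pattern)
        for kind, pattern in TRIGGER_RULES
    )


print(should_trigger(Event("push", "feature/login")))            # True
print(should_trigger(Event("pull_request_merged", "develop")))   # False
print(should_trigger(Event("comment", "main")))                  # False
```

Keeping the rules as data rather than code mirrors how most CI tools work: changing when the pipeline runs means editing configuration, not the runner.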

An effective pipeline is built from a few fundamental components. It typically starts in a version control system (such as Git), where every commit triggers the pipeline. An automated build phase then compiles the code and produces an executable or container image. Next, automated testing phases run unit tests, integration tests, and other quality checks. If any test fails, the pipeline halts and alerts the team. Finally, a deployment phase pushes the build artifacts to a target environment (a staging or production server). Throughout the process, the pipeline provides feedback, so developers know immediately whether a change has broken the build or failed tests.
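The fail-fast behavior described above, where any failing stage halts the run and alerts the team, can be sketched in a few lines of Python. The stage names and the `run_pipeline` and `notify` functions are hypothetical; each stage is simulated by a function that returns `True` on success.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a function that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage], notify: Callable[[str], None] = print) -> bool:
    """Run stages in order, halting at the first failure and alerting the team."""
    for name, step in stages:
        if not step():
            notify(f"Pipeline halted: stage '{name}' failed")
            return False  # later stages (e.g. deploy) never run
        notify(f"Stage '{name}' passed")
    return True


messages: List[str] = []
ok = run_pipeline(
    [
        ("build", lambda: True),
        ("unit-tests", lambda: False),  # simulated failing test suite
        ("deploy", lambda: True),
    ],
    notify=messages.append,
)
print(ok)        # False
print(messages)  # ["Stage 'build' passed", "Pipeline halted: stage 'unit-tests' failed"]
```

Passing `notify` as a parameter keeps the runner independent of any particular alerting channel; in practice it might post to chat or email.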

Benefits of CI/CD Pipelines

CI/CD pipelines offer clear, measurable gains for development teams and businesses. The most obvious benefit is faster, more frequent releases. By automating manual steps, pipelines let teams push updates in small batches rather than waiting for big, risky releases. This speeds up the time from code completion to user delivery. In fact, teams that adopt CI/CD often see their time-to-market improve significantly. For example, after building a CI/CD pipeline, Etsy cut its deployment time from hours to mere minutes and now does dozens of releases per day.

Automating testing and deployment also means fewer bugs reach production. When every commit runs through a suite of automated tests, bugs are found quickly. As one report notes, CI/CD pipelines ensure “bugs are caught early and fixed promptly,” which maintains high software quality. Fewer errors reach end users, which leads to more satisfied customers. Companies that release through pipelines report less risky, better-tested releases and, as a result, higher user satisfaction and confidence.

Another major advantage is a lighter manual workload. Without automation, developers typically spend much of their time building, testing, and deploying code by hand. According to one source, automating these processes frees developers to work on new features, improving both developer experience and productivity. It can also reduce context switching and burnout, since developers spend their time coding instead of doing repetitive work. For example, studies have found that adopting CI/CD practices can measurably reduce deployment pain and team burnout.

Finally, pipelines make a team's work more visible and predictable. With a pipeline in place, teams can see which code is running in each environment and trace each change as it moves through the stages. This transparency helps managers estimate release dates more accurately and lets teams release whenever they choose. Because pipelines encourage small releases, a bug that does slip through is easier to isolate and fix. In short, pipelines establish a consistent, predictable process that makes deployments low-risk and routine.

Core Components of CI/CD Pipelines

Building a CI/CD pipeline involves combining several tools and practices. The main components include:

- Version Control and Source Repositories. A single source of truth (like a Git repository) is critical. All code, build scripts, and configuration files live in version control. This is where each code change (commit or merge) triggers the pipeline. The pipeline should be configured to use the latest code from the main branch whenever it runs.

- Build Automation. The pipeline needs to compile or assemble code into a deliverable form. This can mean compiling code, creating a container image, or bundling assets. Build tools (such as Maven, Gradle, or Docker) are configured so that the entire build can run with a single command.

- Automated Testing. A CI/CD pipeline relies on continuous testing. The pipeline runs unit tests, integration tests, and often static analysis tools. The goal is to validate every change quickly. Automated tests should run as soon as a commit is pushed so that bugs and regressions are caught immediately. Test results act as a gate: if tests fail, the pipeline stops and notifies the team. This practice greatly reduces the amount of broken code that reaches production.

- Deployment Automation. The final step is automating the delivery of code to target environments. Continuous Delivery keeps every artifact packaged and ready to deploy at any time, with the release to production typically triggered by a person, whereas Continuous Deployment releases every artifact that passes the pipeline automatically. Deployment automation can rely on containers and orchestration (such as Docker and Kubernetes) or on infrastructure-as-code to create test or production environments on demand.

- Monitoring and Feedback. Good pipelines build in checks and feedback. After the application is deployed to a staging or production environment, monitoring tools confirm that it behaves as expected. Any malfunction raises an alert so the team can respond. This feedback loop confirms not only that code was deployed, but also that the deployment actually works in the real world.
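The practical difference between Continuous Delivery and Continuous Deployment, plus the post-deployment check from the monitoring step, can be sketched as a single release function. The `release` function, its `mode` values, and the `deploy` and `health_check` callables are hypothetical stand-ins; real platforms implement the approval gate with their own manual-approval features.

```python
from typing import Callable, List, Optional


def release(
    artifact: str,
    mode: str,
    deploy: Callable[[str], None],
    health_check: Callable[[], bool],
    approved_by: Optional[str] = None,
) -> str:
    """Release an artifact that has already passed build and test stages.

    mode="delivery":   the artifact is ready, but a person must approve it.
    mode="deployment": every passing artifact ships automatically.
    Returns "ready", "healthy", or "alert".
    """
    if mode not in ("delivery", "deployment"):
        raise ValueError(f"unknown mode: {mode!r}")
    if mode == "delivery" and approved_by is None:
        return "ready"  # packaged and waiting for a manual approval
    deploy(artifact)
    # Post-deployment feedback: confirm the release actually works.
    return "healthy" if health_check() else "alert"


shipped: List[str] = []
print(release("app:1.4", "delivery", shipped.append, lambda: True))           # ready
print(release("app:1.4", "delivery", shipped.append, lambda: True, "alice"))  # healthy
print(release("app:1.5", "deployment", shipped.append, lambda: False))        # alert
print(shipped)  # ['app:1.4', 'app:1.5']
```

The only code-level difference between the two modes is the approval check; everything upstream of it (build, test, packaging) is identical.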

Each pipeline can add further stages, such as security scanning or performance testing, but the core stages (code, build, test, deploy) are always present. A common pattern is to split the pipeline across environments (development, staging, production) and promote to the next stage only builds that have passed all tests in the current one. Pipeline scripts themselves are kept in source control so that the pipeline is versioned and reviewable.
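The promotion pattern described above, where a build moves to the next environment only after passing checks in the current one, might look like this in its simplest form. The environment names and the `promote` function are assumptions for illustration, and deployment itself is simulated by recording the environment name.

```python
from typing import Callable, Dict, List

# Environments in promotion order (hypothetical names).
ENVIRONMENTS = ["development", "staging", "production"]


def promote(build_id: str, checks: Dict[str, Callable[[str], bool]]) -> List[str]:
    """Deploy a build to each environment in turn, running that environment's
    checks before promoting it further. Returns the environments reached."""
    reached: List[str] = []
    for env in ENVIRONMENTS:
        reached.append(env)          # stand-in for deploying build_id to env
        if not checks[env](build_id):
            break                    # failed here: never promoted further
    return reached


all_pass = {env: (lambda build: True) for env in ENVIRONMENTS}
print(promote("build-42", all_pass))
# ['development', 'staging', 'production']

staging_fails = {**all_pass, "staging": lambda build: False}
print(promote("build-43", staging_fails))
# ['development', 'staging']
```

Requiring a check for every environment (a missing entry raises `KeyError`) is a deliberate choice here: no build should reach production by default.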

How to Build CI/CD Pipelines

Building an effective CI/CD pipeline takes careful planning. Teams typically start with a small pipeline and expand it over time. A general process to follow is:

- Define Requirements. Decide what you want your pipeline to do. Identify which tests to run, which environments to deploy to, and what the approval process is (e.g., manual approvals before production deployment).

- Choose Tools. Pick a CI/CD platform that fits your environment (see next section). Tools range from cloud services like GitHub Actions and GitLab CI to self-hosted servers like Jenkins or Azure DevOps Server. Many tools offer pre-built integrations for version control, container registries, and notifications.

- Set Up Version Control Integration. Connect your chosen CI tool to your code repository. Configure it so that pushes or pull-request merges to the main branch automatically trigger the pipeline.

- Create Build and Test Scripts. Write scripts that compile or package your code. Also, create automated test scripts (unit tests, integration tests, etc.). These scripts should run successfully on a local machine and then be included in the pipeline configuration.

- Define Pipeline Configuration. Most CI/CD tools use a YAML or similar config file that describes pipeline stages. For example, you might define stages like “build,” “test,” and “deploy,” each with the commands to run. This config file is stored in the repository so the pipeline is reproducible.

- Add Testing and Quality Gates. Integrate code quality tools (linting, vulnerability scanning, static analysis). Run tests on every commit. If a test fails, configure the pipeline to stop and notify developers immediately.

- Configure Deployment Steps. Automate deployment to your target environment. This could mean pushing Docker containers to a registry and then updating Kubernetes, or calling cloud APIs to deploy an artifact. Ensure credentials for deployment are handled securely (e.g. using secrets management).

- Set Up Notifications and Feedback. Integrate notifications (Slack, email, etc.) so the team is alerted of pipeline failures or successes. Establish dashboards or reports to track pipeline health and test coverage.

- Iterate and Improve. After the initial setup, monitor performance. Tweak timings, add parallelism to speed builds, or refactor complex scripts. Over time, add more tests (security scans, performance tests) and consider branching strategies (like using feature branches with dedicated pipelines).

- Enforce Best Practices. Use trunk-based development or merge small changes frequently into the main branch. Keep pipeline stages fast by parallelizing tests. Ensure testing environments mimic production as much as possible. Make the pipeline itself reliable so teams can trust it.
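Real CI/CD tools read their stage definitions from YAML files; the sketch below models the same configuration-driven idea in Python, with a dictionary standing in for the parsed config. The `PIPELINE` structure, its stage names, and the `DEPLOY_TOKEN` secret name are hypothetical, and the commands are trivial placeholders that call the current Python interpreter so the example runs anywhere.

```python
import os
import subprocess
import sys
from typing import List

# Hypothetical pipeline definition, shaped like a parsed YAML config.
# Commands are argument lists (no shell); here they just call Python.
PIPELINE = {
    "stages": ["build", "test", "deploy"],
    "jobs": {
        "build": [[sys.executable, "-c", "print('compiling')"]],
        "test": [[sys.executable, "-c", "print('running tests')"]],
        # The deploy step reads its credential from the environment at runtime.
        "deploy": [[sys.executable, "-c",
                    "import os; assert os.environ['DEPLOY_TOKEN']; print('deploying')"]],
    },
    # Secret *names* only; values come from the CI platform's secret store.
    "secrets": ["DEPLOY_TOKEN"],
}


def run(config: dict) -> List[str]:
    """Run each stage's commands in order; stop at the first non-zero exit.

    Returns the stages that completed successfully.
    """
    missing = [name for name in config["secrets"] if name not in os.environ]
    if missing:
        raise RuntimeError(f"missing secrets: {', '.join(missing)}")
    completed: List[str] = []
    for stage in config["stages"]:
        for cmd in config["jobs"][stage]:
            # Child processes inherit os.environ, including injected secrets.
            result = subprocess.run(cmd)
            if result.returncode != 0:
                print(f"stage '{stage}' failed (exit code {result.returncode})")
                return completed
        completed.append(stage)
    return completed


if __name__ == "__main__":
    # Local dry run only: in CI, the platform injects the real secret.
    os.environ.setdefault("DEPLOY_TOKEN", "dummy-value-for-local-runs")
    print(run(PIPELINE))  # ['build', 'test', 'deploy']
```

Checking for required secrets before any stage runs means a misconfigured pipeline fails immediately instead of halfway through a deployment, and the secret value never appears in the configuration itself.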

By following these steps, teams can build effective, low-friction pipelines. Keep in mind that pipelines are built gradually: start small (even with simple tests) and expand incrementally toward full delivery. Also, involve your team in designing the pipeline so it fits your project and workflows.

Popular CI/CD Tools

Many tools exist to help build CI/CD pipelines. Your choice depends on your tech stack, scale, and team preference. Some of the most widely used include:

- GitHub Actions. A cloud CI/CD service tightly integrated with GitHub. It’s very popular for projects hosted on GitHub: roughly 62% of developers use GitHub Actions for personal projects, and 41% in professional settings. It’s easy to get started with Actions because it directly hooks into GitHub events (like pushes and pull requests).

- Jenkins. A long-established, open-source, self-hosted automation server. Jenkins pipelines are defined in a Jenkinsfile using a Groovy-based domain-specific language, and a large plugin ecosystem extends what Jenkins can do. Its flexibility keeps it popular in large organizations, where it supports complex workflows.

- GitLab CI/CD. Part of GitLab's integrated DevOps platform. It reads a YAML file (.gitlab-ci.yml) in the repository that describes jobs and stages. It is a natural choice for teams already using GitLab for source control, since it is built in and uses runners to execute jobs.

- CircleCI, Travis CI, and TeamCity. Other widely used CI/CD services, some cloud-based and some hybrid. They offer hosted or on-premises options with different trade-offs in cost and configuration.

- Azure Pipelines. Microsoft's cloud-based CI/CD service and part of Azure DevOps. It integrates well with the Microsoft ecosystem but supports many other platforms, and it offers a generous free tier.

- Spinnaker, Argo CD, Flux. These tools focus on the CD (deployment) side. Spinnaker, open-sourced by Netflix, manages multi-cloud deployments. Argo CD and Flux are Kubernetes GitOps tools: deployments are triggered by changes to a Git repository that holds the desired configuration.
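The GitOps model behind Argo CD and Flux boils down to a reconciliation loop: compare the desired state declared in Git with what is actually running, then apply the difference. The sketch below models both states as plain dictionaries mapping a resource name to its container image; it is a simplified illustration, not how either tool is implemented, and the resource names are hypothetical.

```python
from typing import Dict, List

# Each state maps a resource name to the container image it should run.
State = Dict[str, str]


def plan_sync(desired: State, actual: State) -> Dict[str, List[str]]:
    """Diff the desired state (from Git) against the live state."""
    return {
        "create": sorted(desired.keys() - actual.keys()),
        "update": sorted(k for k in desired.keys() & actual.keys()
                         if desired[k] != actual[k]),
        "delete": sorted(actual.keys() - desired.keys()),
    }


def reconcile(desired: State, actual: State, prune: bool = True) -> State:
    """Apply the plan and return the new live state.

    prune=False leaves resources that were removed from Git running.
    """
    plan = plan_sync(desired, actual)
    new_state = dict(actual)
    for name in plan["create"] + plan["update"]:
        new_state[name] = desired[name]
    if prune:
        for name in plan["delete"]:
            del new_state[name]
    return new_state


desired = {"web": "web:1.3", "worker": "worker:2.0"}   # committed to Git
actual = {"web": "web:1.2", "cron": "cron:0.9"}        # running in the cluster
print(plan_sync(desired, actual))
# {'create': ['worker'], 'update': ['web'], 'delete': ['cron']}
print(reconcile(desired, actual) == desired)  # True
```

A real GitOps agent runs this comparison continuously, so any drift in the cluster is corrected on the next pass; real tools also typically make pruning of removed resources a configurable option, which the `prune` flag imitates.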

According to a CNCF survey, the most-used CI/CD pipeline tools in 2024 included GitHub Actions (51% of respondents), Jenkins (42%), Argo (39%), and GitLab (29%). All showed significant growth year-over-year. This indicates that while the ecosystem is varied, cloud-native and container-friendly tools are on the rise.

Many organizations also run several tools side by side. A 2025 industry survey found that 32 percent of companies use two different CI/CD systems, and 9 percent use three or more. This multi-tool reality usually stems from legacy systems, team preferences, or the requirements of particular projects. For example, a team might run simple builds on GitHub Actions while keeping Jenkins for complex legacy deployment processes.

Best Practices for CI/CD Pipelines

To build effective pipelines, follow these best practices:

- Keep Builds Fast and Reliable. Speed is key. Slow pipelines frustrate developers. Use techniques like test parallelization and incremental builds to shorten run time. Only build what changed. Consider container or VM caching.

- Use Trunk-Based Development. Encourage small, frequent merges into the main branch to avoid large, risky integrations. This aligns with the CI philosophy of integrating early and often.

- Automate Everything. As much as possible, let the pipeline handle tasks. Remove manual steps in building, testing, and deploying. For example, automate database migrations and environment provisioning so they are repeatable.

- Isolate Environments. Test in environments that mimic production. Use on-demand environments or container snapshots. Ensure that each pipeline run has a clean environment to avoid “it works on my machine” issues.

- Include Security Checks (DevSecOps). Shift security left by integrating tools that scan for vulnerabilities in code and dependencies during the pipeline. For example, run static code analysis, dependency checks, and container image scanning as part of your test stages.

- Manage Secrets Securely. Never hard-code credentials in pipeline scripts. Use secrets management (like encrypted variables or vault systems) to inject credentials at runtime.

- Monitor Pipeline Health. Track metrics such as build success rate, test coverage, and mean time to recovery. Alert the team proactively when the pipeline fails or tests frequently break.

- Maintain the Pipeline as Code. Store pipeline definitions in version control. Review changes to the pipeline configuration as rigorously as application code. This ensures any update to the pipeline is tracked and auditable.

- Provide Clear Feedback. When a build or test fails, send concise information so developers can quickly fix issues. Good notifications reduce the time to resolution.

- Parallelize and Distribute. If your CI/CD tool supports it, run independent tasks in parallel. Modern cloud runners make it easy to scale out. This ensures long test suites do not hold up the pipeline.
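Two of the speed practices above, building only what changed and running independent work in parallel, can be combined in one sketch. It fingerprints each module's files with SHA-256, skips modules whose fingerprint matches a cache, and builds the rest concurrently with `concurrent.futures`. The module layout, the in-memory cache, and the `build` callable are hypothetical; real build tools also track dependencies between modules, which this sketch deliberately ignores.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

Module = Dict[str, str]  # file path -> file contents


def fingerprint(files: Module) -> str:
    """Hash a module's paths and contents into a stable cache key."""
    h = hashlib.sha256()
    for path in sorted(files):
        # Hashing each part separately gives fixed-length, unambiguous input.
        h.update(hashlib.sha256(path.encode()).digest())
        h.update(hashlib.sha256(files[path].encode()).digest())
    return h.hexdigest()


def incremental_build(
    modules: Dict[str, Module],
    cache: Dict[str, str],
    build: Callable[[str], None],
    max_workers: int = 4,
) -> List[str]:
    """Rebuild only modules whose fingerprint changed, in parallel.

    Assumes modules are independent (no build-order dependencies).
    """
    keys = {name: fingerprint(files) for name, files in modules.items()}
    changed = sorted(name for name, key in keys.items() if cache.get(name) != key)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # list() waits for every build and re-raises any build exception.
        list(pool.map(build, changed))
    for name in changed:
        cache[name] = keys[name]  # recorded only once every build succeeded
    return changed


def fake_build(name: str) -> None:
    """Stand-in for a real build command."""


cache: Dict[str, str] = {}
modules = {"api": {"api/main.py": "v1"}, "web": {"web/app.ts": "v1"}}
print(incremental_build(modules, cache, fake_build))  # ['api', 'web']
print(incremental_build(modules, cache, fake_build))  # []
modules["web"]["web/app.ts"] = "v2"
print(incremental_build(modules, cache, fake_build))  # ['web']
```

Because fingerprints are recorded only after all builds succeed, a failed run leaves the cache untouched and the same modules are retried next time.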

With these practices, teams build robust pipelines that can grow with the project. According to a JetBrains survey, switching CI/CD tools is challenging, so it pays to take the time to set up and optimize your pipeline properly from the start. During a gradual migration, teams may run legacy and new pipelines side by side. The broader lesson is that pipelines should evolve as the project advances and improve over time.

CI/CD Pipelines in Action: Real-World Examples

Real companies showcase how CI/CD pipelines accelerate development:

- Netflix. The streaming giant deploys thousands of code changes every day through CI/CD pipelines. It moved to microservices so that teams can deploy their services independently. Netflix automates multi-cloud deployments with Spinnaker, the open-source CD platform it created. Every code change goes through automated testing and canary releases. Netflix also practices chaos engineering (for example, with its Chaos Monkey tool) to improve reliability. As a result, Netflix can roll out new features continuously with minimal disruption, and its adoption of CI/CD has directly improved both reliability and time to market.

- Etsy. The online marketplace relies on CI/CD to ship changes to its website quickly and safely. By automating builds, tests, and deployments, Etsy moved to more than 50 deploys a day. Its pipeline relies heavily on automated testing and uses feature toggles to roll out changes gradually. As a result, deployment times dropped from hours to minutes, and the team can respond to market needs in near real time. That agility is essential in a fast-moving retail environment.

- Google. Google runs one of the largest CI/CD systems in the world. Its code lives in a monorepo, and its build tool, Blaze (open-sourced as Bazel), provides fast, parallel builds and tests. Google's build infrastructure runs millions of builds and tests per day, giving developers rapid feedback. Canary releases help confirm that changes are stable before they reach all users. This infrastructure lets thousands of Google engineers roll out changes to global services many times a day.

- Other Examples. Companies such as Amazon and Facebook follow a similar model, with microservices and extensive automation. Smaller startups use CI/CD too: a small team might, for example, use GitHub Actions to run tests and then deploy automatically to a Kubernetes cluster or cloud service.
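Several of the examples above rely on canary releases. A heavily simplified canary check compares the canary's error rate with the baseline's and decides whether to promote, roll back, or keep waiting for more traffic. The `Sample` type, the thresholds, and the decision labels are hypothetical; automated canary analysis in tools such as Spinnaker uses statistical comparisons across many metrics rather than a single ratio.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    """Request and error counts observed for one group of servers."""
    requests: int
    errors: int

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def judge_canary(baseline: Sample, canary: Sample,
                 max_ratio: float = 1.5, min_requests: int = 1000,
                 floor: float = 0.001) -> str:
    """Decide whether to 'promote', 'rollback', or 'wait' on a canary.

    The canary passes if its error rate is at most max_ratio times the
    baseline's; `floor` keeps a near-zero baseline from failing every canary.
    """
    if canary.requests < min_requests:
        return "wait"  # not enough traffic yet to judge fairly
    allowed = max(baseline.error_rate * max_ratio, floor)
    return "promote" if canary.error_rate <= allowed else "rollback"


baseline = Sample(requests=100_000, errors=200)   # 0.2% errors
print(judge_canary(baseline, Sample(500, 0)))      # wait
print(judge_canary(baseline, Sample(5_000, 10)))   # promote
print(judge_canary(baseline, Sample(5_000, 50)))   # rollback
```

The minimum-traffic rule matters as much as the threshold: judging a canary on a handful of requests would make both promotions and rollbacks essentially random.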

These case studies show that pipelines scale from startups to large enterprises. The common thread in every example is end-to-end automation of the software lifecycle.

CI/CD Pipelines Trends and Future

CI/CD pipelines continue to evolve with new trends:

- Cloud-Native and Containers. Pipelines are increasingly container-based, and many teams now produce container images as their build artifacts. Cloud-hosted pipelines have grown in popularity alongside cloud providers and Kubernetes. One survey reflects this shift: 41% of developers use cloud-hosted CI, and 34% use cloud-based CD tools. Managed CI/CD services (e.g. GitHub Actions, GitLab CI, Azure Pipelines) remove the burden of running pipeline infrastructure, letting teams focus on code.

- AI and Automation. Artificial intelligence is starting to enter CI/CD. Pipelines can include automated code review, test generation, and even anomaly detection. For example, AI can be used to predict flaky tests or suggest fixes for failed builds. This trend is still in its early stages, but it will likely make pipelines smarter and give developers more assistance in the future.

- Security Integration (DevSecOps). Security has become a standard part of pipelines. Automated pipeline stages often include Static Application Security Testing (SAST), Dynamic Application Security Testing (DAST), and software bill of materials (SBOM) generation. As part of the push to shift security left, more and more pipelines run security scans before deployment.

- GitOps Practices. GitOps is increasingly popular for Kubernetes and cloud deployments. In a GitOps setup, a deployment agent tracks a Git repository of Kubernetes manifests and synchronizes the cluster with any changes. With tools such as Argo CD and Flux, deployments are driven by pull requests to that deployment repository.

- Metrics-Driven Improvement. Teams use DORA metrics (deployment frequency, lead time, MTTR, change failure rate) to measure pipeline effectiveness. According to industry reports, teams that invest in CI/CD generally score higher on these metrics. For instance, the CD Foundation report highlights that teams using CI/CD tools achieve significantly better deployment frequency and faster lead times.

- DevOps Culture. Beyond technology, CI/CD pipelines reinforce a culture of collaboration, with developers and operations sharing one pipeline. DevOps has become nearly universal: one report found that 83 percent of developers are involved in DevOps practices. This cultural shift centers on automation, shared responsibility, and continuous improvement around the pipeline.
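The DORA metrics mentioned in the list above can be computed from a simple deployment log. This sketch is illustrative: the `Deployment` record and its fields are hypothetical, lead time is measured from commit to production, and time to restore is measured from the failing deployment rather than from when an incident was detected, which is a simplification.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Dict, List, Optional


@dataclass
class Deployment:
    """Hypothetical record of one production deployment."""
    commit_time: datetime                     # when the change was committed
    deploy_time: datetime                     # when it reached production
    failed: bool = False                      # did it cause a production failure?
    restored_time: Optional[datetime] = None  # when service was restored


def _hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def dora_metrics(deploys: List[Deployment], period_days: int) -> Dict[str, float]:
    """Compute simplified DORA metrics over a reporting period."""
    if not deploys:
        return {}
    lead_times = [d.deploy_time - d.commit_time for d in deploys]
    failures = [d for d in deploys if d.failed]
    restores = [d.restored_time - d.deploy_time
                for d in failures if d.restored_time is not None]
    return {
        "deployment_frequency_per_day": len(deploys) / period_days,
        "median_lead_time_hours": _hours(median(lead_times)),
        "change_failure_rate": len(failures) / len(deploys),
        # Simplification: restore time is measured from the deploy itself.
        "mean_time_to_restore_hours": (
            _hours(sum(restores, timedelta()) / len(restores)) if restores else 0.0
        ),
    }


start = datetime(2024, 1, 1, 9, 0)
log = [
    Deployment(start, start + timedelta(hours=2)),
    Deployment(start, start + timedelta(hours=4), failed=True,
               restored_time=start + timedelta(hours=5)),
    Deployment(start, start + timedelta(hours=6)),
    Deployment(start, start + timedelta(hours=8)),
]
print(dora_metrics(log, period_days=2))
# {'deployment_frequency_per_day': 2.0, 'median_lead_time_hours': 5.0,
#  'change_failure_rate': 0.25, 'mean_time_to_restore_hours': 1.0}
```

Using the median for lead time keeps one unusually slow change from dominating the figure, which is why many teams prefer it over the mean for that metric.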

Looking ahead, CI/CD is becoming standard. With broad adoption (60% of organizations use it for most or all of their applications), it is now regarded as a baseline capability rather than a niche practice. Future pipelines are likely to be even more plug-and-play, secure, and intelligent.

Conclusion

CI/CD pipelines are the backbone of modern software delivery. They offer a clear path to faster releases, higher quality, and more efficient teams. By automating builds, tests, and deployments that would otherwise be manual, pipelines reduce errors and bottlenecks. Recent statistics show that most development teams now rely on CI/CD practices, and adoption grows every year.

To create an efficient CI/CD pipeline, choose the right tools, define the build and test steps, and keep iterating. Teams should follow best practices such as trunk-based development, test automation, and security checks. The experience of Netflix, Etsy, Google, and others shows what strong pipelines make possible: these companies ship hundreds or thousands of changes every day with confidence.

As CI/CD pipelines continue to mature with trends like cloud-native tools and AI assistance, they will remain essential for any organization seeking fast, reliable software delivery. Adopting CI/CD pipelines effectively means building a culture of automation and continuous improvement, one that delivers value to users at unprecedented speed.